TL;DR

- India's Digital Personal Data Protection Rules, 2025 turn consent into a formalized infrastructure: detailed notice requirements, 'verifiable consent' for children/vulnerable persons, and a regulated Consent Manager ecosystem.

- At the same time, large parts of AI training and analytics can be pushed into 'research/statistics' or 'legitimate use' buckets where the Act does not apply - including consent and withdrawal.

- This creates a structural tension: front-end consent looks strong, but back-end AI can remain largely consent-free if framed as research, especially when using publicly available personal data.

- If India wants a credible, consent-coherent AI regime, it will need to tie model training and profiling much more tightly to the same notice, purpose and withdrawal logic it has just 'weaponized' at the service layer.

What I wrote earlier - and what has changed

At the beginning of 2025, I published “India’s Failed Personal Data Protection Law: A Missed Opportunity”. I argued that the new framework prioritized centralized control and state-approved intermediaries over user-first, decentralized privacy models. I was particularly critical of the Consent Manager concept as an exclusionary, bureaucratic construct that risked isolating India from global, first-party, and decentralized consent architectures.

Since then, the Digital Personal Data Protection Rules, 2025, have been notified. They do more than operationalize the Act; they reveal what ‘consent’ will actually mean in India - and where it will be consciously side-stepped.

This article is not about re-litigating every aspect of the law. It focuses on one question that matters for DPOs, lawyers, and policy people:

What happens when a legal system builds an elaborate notice-and-consent machine - and then lets AI slip out through the ‘research’ door?

Thanks to Luis Alberto Montezuma for posting India’s notified final Digital Personal Data Protection (DPDP).

The timeline: when consent really starts to bite

The Rules phase in the DPDP regime over time:

- Immediately in force:

Definitions and institutional machinery - the Board, governance provisions, the high-level framework. - After 12 months:

The Consent Manager regime becomes operational (registration, conditions, duties). - After 18 months:

The bulk of operational duties kick in: notice and consent, security safeguards, children’s data and verifiable consent, research/statistics carve-outs, storage limitation, rights handling, and grievance redressal.

So ‘weaponized’ consent is real, but it’s also slow-burn. Controllers and future Consent Managers have a long on-ramp; AI developers have an equally long window to embed their practices behind the ‘research’ curtain.

What India gets right: consent as a serious legal instrument

Notice as the anchor

The Rules define what a valid notice must look like before consent is obtained. It must:

- Be presented independently of other information (not buried in the Terms of Service).

- Be understandable on its own, without cross-referencing multiple documents.

Provide, in clear language:

- An itemized description of the personal data to be processed.

- The specific purposes and a description of the goods/services or uses enabled.

Include a specific link or mechanism that lets the data principal:

- Withdraw consent with ease comparable to giving it.

- Exercise their data protection rights.

- Lodge a complaint with the Data Protection Board.

This is not vague “by using this site you agree” language. It is a structured, purpose-bound notice requirement that, on paper, is stricter and more granular than many cookie banners we see today in GDPR jurisdictions.

“The Rules treat notice as infrastructure, not decoration - consent must be anchored

in itemized data and specific purposes, with a reversible choice on top.”

For legal and DPO audiences, this is familiar territory: purpose limitation, transparency and genuine withdrawal rights, codified in a very prescriptive way.

Verifiable consent for children and protected persons

The Rules introduce ‘verifiable consent’ for two categories:

- Children, where verifiable parental consent is required; and

- Persons with disabilities with a lawful guardian, where the guardian consents on their behalf.

In both cases, the controller must perform due diligence to ensure that:

- The person giving consent is an identifiable adult (for children), or

- A lawful guardian duly appointed under the relevant guardianship law.

Verification can rely on:

- Identity/age details already held by the data fiduciary; or

- Identity/age details or virtual tokens from authorized entities or Digital Locker providers.

This is more than a checkbox; it ties consent to trusted identity infrastructure. In a world of AI-mediated services, age-gating and online harms, this is exactly the kind of attribute-level assurance regulators claim to want.

Consent Managers: consent as regulated infrastructure

My earlier critique of the Consent Manager model still stands: India has chosen a centralized, state-approved, capital-barriered intermediary instead of enabling open, first-party or decentralized consent architectures.

But from a consent-law perspective, the model is worth unpacking, because it turns consent into a shared, regulated rail.

Who can be a Consent Manager?

Under the Rules and their schedules, a Consent Manager must:

- Be an Indian-incorporated company with a minimum net worth threshold.

- Demonstrate sufficient technical, operational, and financial capacity.

- Hard-wire specific obligations and conflict-of-interest safeguards into its constitutional documents (MoA/AoA).

Undergo independent certification that:

- Its platform for managing consent complies with standards published by the Board.

- Its technical and organizational measures are adequate.

There is no room here for small, informal players or unregulated ‘CMP’ vendors. Consent management is repositioned as licensed infrastructure.

What must a Consent Manager do?

The obligations effectively build a consent-rail mandate. Consent Managers must:

- Enable individuals to give, manage, review, and withdraw consent for one or multiple data fiduciaries through a unified interface.

- Relay personal data or consent instructions such that the data is not readable by the Consent Manager itself, avoiding a new surveillance intermediary.

Maintain detailed records of:

- Consents given, denied, or withdrawn;

- Notices associated with each consent;

- Any onward sharing to transferee data fiduciaries.

Provide:

- User access to this record; and

- A machine-readable export on request.

- Retain records for a minimum number of years (with extensions where law or agreement requires).

Consent Managers must also:

- Act in a fiduciary capacity towards the individual; and

- Implement conflict-of-interest controls vis-à-vis data fiduciaries (promoters, related parties, shareholdings, etc.).

For lawyers and DPOs, this signals the direction of travel: centralized but formally strong consent governance for operational data processing.

Where the consent story stops: ‘legitimate use’ and research exemptions

If the story ended with granular notice, verifiable consent, and audited Consent Managers, India would have a strong - if centralized - consent regime.

Sadly, it does not end there.

State functions and ‘legitimate use’

The Act, read with the Rules, allows State bodies and their instrumentalities to process personal data without consent for a wide range of public-task scenarios - provision of subsidies, benefits, services, and issuance of certificates, licences, and permits - subject to standards around lawfulness, necessity, purpose limitation, minimization, retention, security, and transparency.

This is the DPDP equivalent of a ‘public interest / legitimate use’ legal basis. That is not unusual; most data protection regimes have an analogue. But it immediately creates a non-consent zone for large, data-hungry State AI systems: welfare targeting, risk scoring, fraud analytics, and everything adjacent.

Research, archiving, and statistics - AI’s escape hatch

The real escape hatch is the research/statistics/archiving exemption. The Rules provide that the Act does not apply to processing of personal data necessary for research, archiving, or statistical purposes, if carried out in accordance with certain standards (lawfulness, safeguards, etc.).

If AI training on personal data is characterized as ‘research’ or ‘statistical’ processing, and the controller can argue that it follows those standards, then the DPDP Act - including its consent provisions - simply does not apply to that processing.

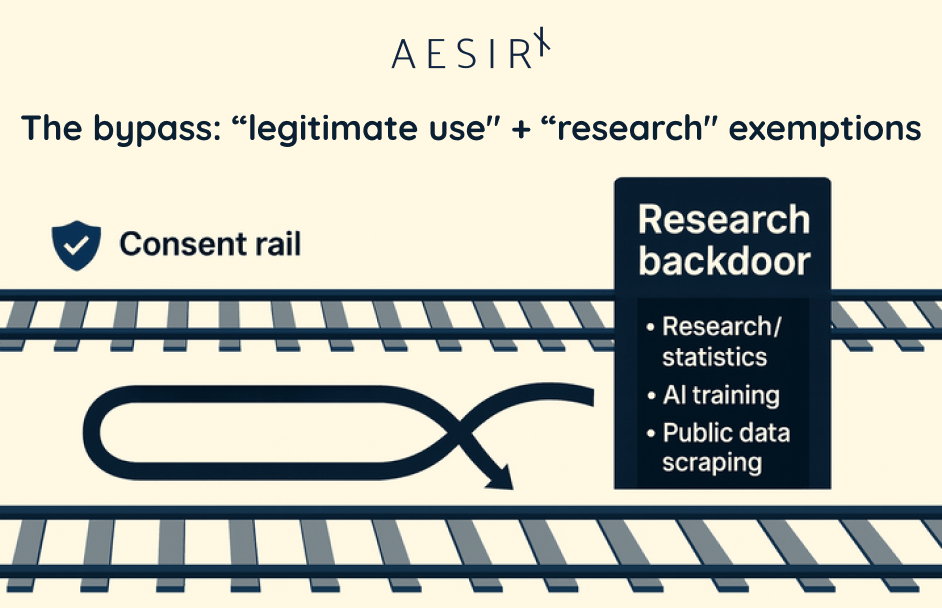

That produces a sharp asymmetry:

Service-level uses of data are locked into a heavy consent rail; model-level uses can be pushed into a consent-free research corridor.

For DPOs and lawyers advising multinational groups, this raises immediate questions:

- How often will AI developers (inside or outside India) frame training as ‘research’ or ‘statistical’ to avoid consent and withdrawal constraints?

- What happens when operational data collected on the basis of granular notice flows silently into a research exception for general-purpose model training?

“The more AI training is framed as ‘research’, the less India’s elaborate consent rail actually

touches the decisions that shape people’s digital lives.”

Publicly available personal data and AI training

The DPDP framework has already been criticized for its treatment of publicly available personal data and the breadth of its ‘legitimate use’ categories. The Rules do little to narrow these openings in the AI context.

Combine three ingredients:

- Data scraped from public sources (social media, forums, public registers).

- Classification of the activity as ‘research’ or ‘statistical’ processing for AI development.

- Reliance on the research/statistics exemption to move that processing outside the Act entirely.

From a consent perspective, this means:

- The individual whose data is scraped will often never see a DPDP-compliant notice, let alone have a realistic consent or withdrawal moment.

- The Consent Manager infrastructure - with its logs, fiduciary duties and interoperability - never touches these flows. It is structurally bypassed.

For example, a large platform or vendor could scrape years of publicly visible social media posts, forum discussions and profile data, label the activity as ‘statistical research’ for AI development, and train general-purpose models on that corpus. None of the individuals whose posts are ingested would ever see a DPDP-compliant notice, and there would be no meaningful way for them to withdraw consent or opt out of the training step once the research label has been applied.

Strong formal consent for operational services coexists with a consent-less zone for large-scale AI training on public personal data. That is not a bug; it is a design choice.

Dynamic consent vs static research: three hard questions

For a consent-focused audience, three practical questions matter more than any branding of roles or schedules.

Does withdrawal actually reach the model?

The Rules require that:

- Notices provide an easy way to withdraw consent comparable to giving it.

- Data fiduciaries (and Consent Managers) implement rights-handling and grievance mechanisms within defined timelines.

But if the relevant AI processing step is classified under the research/statistics exemption, those rights do not attach to the training step at all. Withdrawal may:

- Stop specific downstream profiling, targeting or enrichment;

- Have no effect on the trained model that already encodes patterns derived from the person’s data.

This is the same structural problem we see globally:

Consent withdrawals are treated as front-end events, while model training is treated as a back-end research operation that is legally insulated from them.

Can Consent Managers support genuinely dynamic, contextual AI consent?

On paper, Consent Managers must:

- Maintain a detailed consent ledger.

- Provide machine-readable exports.

- Offer a unified plane for giving and withdrawing consent across multiple data fiduciaries.

In a multi-modal, ambient AI environment (voice assistants, smart devices, workplace copilots, in-car systems), meaningful consent becomes highly contextual and dynamic:

- Different models and purposes.

- Different data sources (first-party, third-party, public web).

- Different risk profiles (search assistant vs credit scoring).

The Rules still implicitly assume a service/app-centric, account-centric worldview. The risk is that Consent Managers become excellent at managing classical web/app consent, while the truly consequential AI uses live entirely in the ‘research/statistics’ lane.

What happens to accountability?

A consent system only works if:

- Individuals can understand what they are agreeing to;

- Regulators can trace how data moved and was used;

- Courts can test proportionality and necessity against concrete records.

By pushing AI training into research exemptions, India risks creating a layered reality:

- Visible layer: granular consent, verifiable for vulnerable users, independent notices, Consent Managers with exportable logs.

- Invisible layer: model training and experimentation, structurally insulated from individual consent, yet shaping downstream services that heavily affect individuals.

For DPOs and privacy lawyers, that is a familiar pattern from other jurisdictions - but here, it is baked in at design stage rather than emerging as a loophole.

Why this matters beyond India

Even if you never advise an Indian entity, this framework will reach your work.

- Cross-border data flows

Multinationals will want to reuse data or models across India, the EU, and the US. Inconsistencies between the DPDP research carve-outs and GDPR’s constraints on further processing will create complex risk matrices and potential enforcement exposure. - Adequacy and interoperability

A regime that looks strong on front-end consent but permissive on AI research may complicate any future adequacy-style assessments or ‘trusted partner’ status. - Precedent value

Other jurisdictions watching India may be tempted to copy the ‘licensed Consent Manager + broad research exemption’ model, claiming they’ve solved consent while giving AI a wide berth from individual control.

For practitioners, the key is to see through the formalism: strong consent rules on paper do not automatically protect individuals if the most powerful data uses are built on exemptions.

What a consent-coherent AI regime would look like

If we take India’s own Rules seriously, a more coherent model for AI and consent would involve at least four shifts.

Binding model training to purpose and notice

Where personal data (including publicly available personal data) is used for training, there should be a clear, disclosed purpose cluster - not a generic ‘research’ label that dissolves consent.

Individuals should have at least meaningful transparency about whether and how their data feeds into:

- General-purpose models; and

- Domain-specific models (e.g., finance, health, education, employment).

Extending the logic of ‘verifiable consent’ to high-risk AI uses

The same rigour applied to children and persons with disabilities could be extended to high-risk AI contexts, such as:

- Credit and lending;

- Employment and education;

- Biometric and emotion recognition systems.

Consent (or other legal bases) in these contexts should be identity-anchored but context-scoped: strong enough to support accountability, narrow enough to avoid universal tracking.

Limiting the research exemption for AI training on identifiable data

Research/statistics carve-outs could explicitly exclude large-scale behavioral or biometric training on identifiable or easily re-identifiable personal data, unless consent or another strong, testable legal basis is present.

At minimum, training on such data should remain within the Act’s scope, even if some obligations are relaxed. ‘Research’ should not be a magic word that dissolves all individual control.

Using consent infrastructure to govern AI, not just websites

If you are going to build a licensed Consent Manager rail with machine-readable logs and exports, it should also become the control layer for AI uses, not just for classical web/app processing.

That means binding AI use-cases to consent artefacts (or other bases) in a way that can be audited:

- Which data flows from which contexts fed into which models?

- Under which notice and legal basis?

- What does withdrawal of consent actually do for each of those flows?

From my side, I still believe in a first-party, context-scoped consent model, where:

- Data is collected and processed as close as possible to the user and the specific service.

- Identity and attributes (age, jurisdiction, etc.) are handled via privacy-preserving proofs, not central surveillance hubs.

- Any anchor (including blockchain) is used for accountability, not tracking.

That is the pattern we have been working on in the AesirX ecosystem - not as a silver bullet, but as proof that consent-coherent design is technically feasible, also for AI-adjacent services.

When a law weaponizes consent - and then lets AI slip into ‘research’

India’s DPDP Rules show real ambition: notice and consent are taken seriously, formalized and given infrastructure. The law quite literally weaponizes notice and consent - itemized purposes, verifiable consent, licensed Consent Managers, machine-readable logs.

But by treating AI training as a kind of legally insulated research activity, the regime also creates the perfect escape route: the most powerful uses of data can slip into ‘research’, where individual consent, withdrawal and dynamic control largely disappear.

For DPOs, privacy lawyers, and policy professionals, the task now is not just to read the Rules, but to pressure-test every AI use that tries to hide in that corridor:

Whenever you see ‘research’ or ‘statistics’ invoked, ask:

- What is the real purpose?

- Could this be done under a consent-consistent model instead?

- What does withdrawal mean in practice for this use - does it reach the model, or only the UI?

Because if a system weaponizes consent at the surface while outsourcing AI to a consent-free research zone underneath, then the real governance battle is not about banners or Consent Managers at all. It is about whether we are willing to insist that the same consent logic we impose on ordinary services also applies to the models quietly reshaping our societies.

For practitioners, a simple rule of thumb follows from all this:

- Treat any use of the ‘research/statistics’ label in AI projects touching India as a red flag for consent and accountability.

- Insist on a clear, documented chain from notice and legal basis to model training and downstream use, even where the law appears to allow a broad exemption.

- Design for the assumption that individuals, regulators, and courts will increasingly expect the same consent logic that governs services to reach the models behind them.

If we do not, ‘research’ risks becoming the place where the most consequential uses of personal data are precisely those that are least constrained by consent.

Ronni K. Gothard Christiansen

Technical Privacy Engineer & CEO, AesirX.io